This is a stark contrast to what today’s consumers demand: seamless, hyper-relevant experiences. Personalization can drive a 10–15% revenue lift on average, but only if your AI is grounded in the right data. Pretrained models and synthetic data alone won’t get you there. The missing piece is real-world consumer behavior.

Today’s pretrained models, from recommender systems to GPT-style chatbots, are trained on vast swaths of internet text or curated datasets. They’re great at general pattern recognition, but they have no inherent knowledge of your customers. These models don’t learn who your users are, what those users did yesterday, or why one customer’s intent may differ from another’s. As a result, a pretrained AI will default to averages and assumptions, not individualized reasoning.

Synthetic data was supposed to help bridge this gap by generating artificial training examples. But while useful for privacy-safe testing, synthetic data lacks the genuine variability and nuance of actual human behavior. It’s not just less accurate; it’s misleading, because a model trained on it learns the generator’s assumptions rather than what customers actually do. Worse, many “real-world” data panels are equally flawed: dated, sparse, based on convenience sampling, and often riddled with compliance risk.

If your enterprise is relying solely on CRM logs, website clickstreams, or third-party panels, you’re giving your AI a single, narrow view of a multidimensional customer. And that makes your AI easy to beat.

To truly reason about a user’s needs or predict their next move, an AI model needs context from that user’s real-world behavior. Without it, even the most advanced algorithms can stumble.

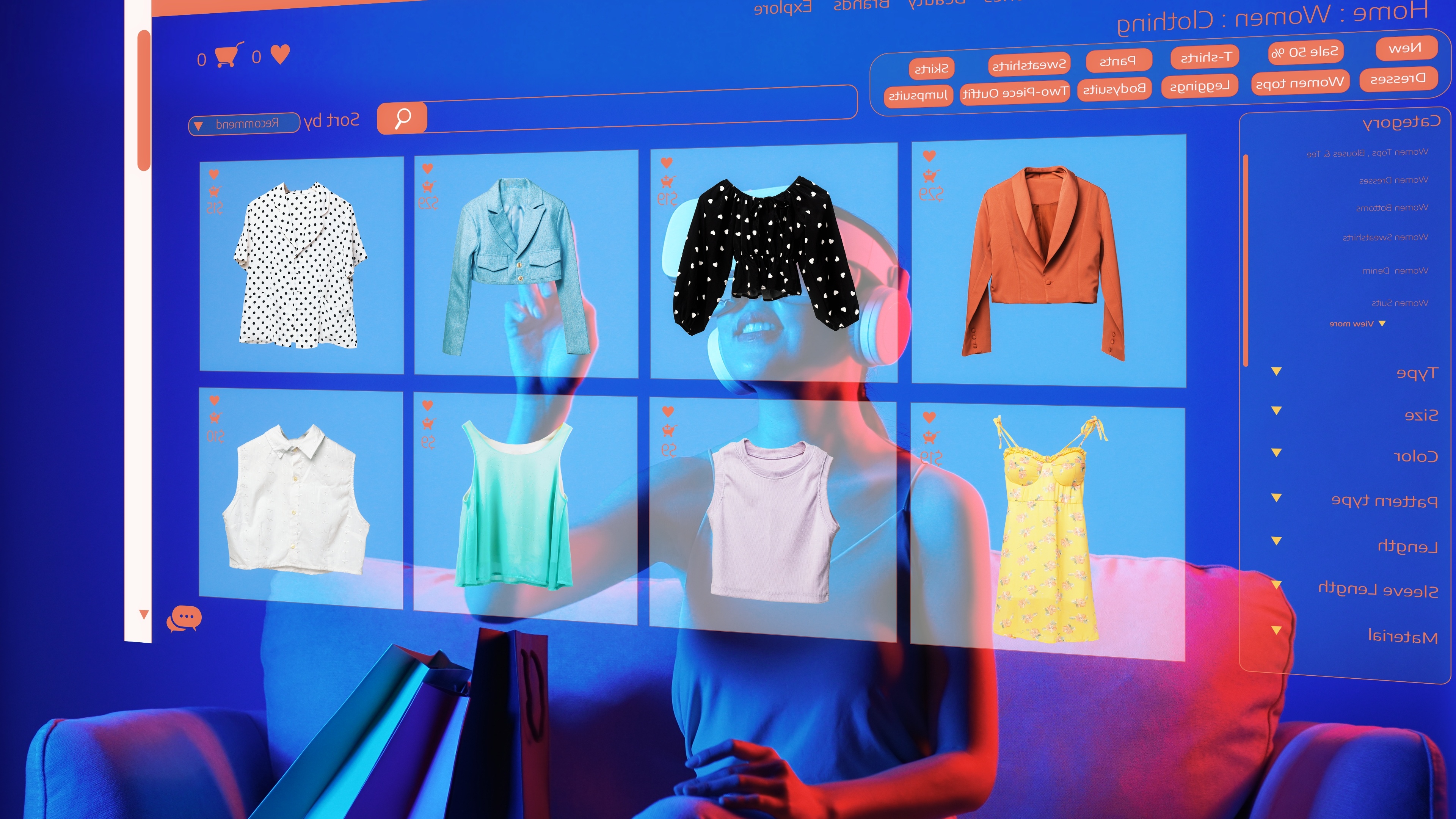

Picture a recommendation engine suggesting a product a customer just bought last week. Or a financial chatbot giving tone-deaf advice because it doesn’t know a user’s spending habits. These are not corner cases — they’re daily occurrences when models are blind to real behavior.
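The first failure above is easy to sketch in code. The snippet below is a minimal illustration, not a production recommender: the function name `filter_recent_purchases`, the event fields `product_id` and `purchased_at`, and the 30-day window are all assumptions made up for this example. It shows how even a simple purchase history lets a system avoid re-recommending something the customer just bought.

```python
from datetime import datetime, timedelta


def filter_recent_purchases(candidates, purchases, now, window_days=30):
    """Drop candidate product IDs the user bought within the last `window_days`.

    `candidates` is a ranked list of product IDs; `purchases` is a list of
    dicts with hypothetical `product_id` and `purchased_at` fields.
    """
    cutoff = now - timedelta(days=window_days)
    recently_bought = {
        p["product_id"] for p in purchases if p["purchased_at"] >= cutoff
    }
    # Preserve the original ranking of whatever survives the filter.
    return [c for c in candidates if c not in recently_bought]


# Illustrative data only.
now = datetime(2024, 6, 15)
purchases = [
    {"product_id": "blender-x", "purchased_at": datetime(2024, 6, 9)},
    {"product_id": "kettle-a", "purchased_at": datetime(2023, 11, 2)},
]
candidates = ["blender-x", "toaster-b", "kettle-a"]

recommended = filter_recent_purchases(candidates, purchases, now)
print(recommended)
```

Without the purchase history, the model has no way to know that the first candidate is the one item this customer does not need right now.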

Large language models, in particular, struggle to personalize in the absence of behavioral memory. They’re powerful pattern matchers, but without persistent, individual signals, they default to surface-level generalizations.

AI needs continuity. It needs a memory of what users did before, across different domains and timelines, to deliver reasoning that feels contextual, relevant, and personal. Without that, your AI is just another shallow interface.
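One way to picture that memory is as a short behavioral summary prepended to each prompt. The sketch below is a hedged illustration under assumed names: `build_behavior_context`, the event fields `category`, `action`, and `timestamp`, and the prompt wording are all hypothetical, and no particular LLM or vendor API is implied.

```python
from collections import Counter
from datetime import datetime


def build_behavior_context(events, now, max_items=3):
    """Summarize a user's event history into a few lines of prompt context."""
    if not events:
        return "No behavioral history available."
    # Count events per category and keep the most frequent ones.
    by_category = Counter(e["category"] for e in events)
    lines = [
        f"- {category}: {count} event(s)"
        for category, count in by_category.most_common(max_items)
    ]
    # Add the single most recent event so the model sees recency as well.
    latest = max(events, key=lambda e: e["timestamp"])
    days_ago = (now - latest["timestamp"]).days
    lines.append(
        f"- most recent: {latest['action']} in {latest['category']}, "
        f"{days_ago} days ago"
    )
    return "\n".join(lines)


# Illustrative data only.
events = [
    {"category": "groceries", "action": "purchase", "timestamp": datetime(2024, 6, 1)},
    {"category": "groceries", "action": "purchase", "timestamp": datetime(2024, 6, 8)},
    {"category": "streaming", "action": "watch", "timestamp": datetime(2024, 6, 12)},
]
context = build_behavior_context(events, now=datetime(2024, 6, 15))

# The summary is placed ahead of the user's question in the prompt.
prompt = f"Known user behavior:\n{context}\n\nUser question: What should I cook this week?"
print(prompt)
```

The design choice here is deliberate compression: the model receives a handful of persistent, individual signals rather than a raw event log, which keeps the prompt short while still grounding the answer in what this user actually did.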

This is where real-world behavioral data makes the difference. Longitudinal, multi-dimensional datasets show you more than what a customer did: they reveal why, how often, and what changed over time.

With these signals, your models stop guessing. They begin to understand:

If your AI models are making strategic decisions — recommendations, forecasts, risk assessments, personalization — then you need this data. Not sometimes. Always.

AnthologyAI was built to solve this very problem. It offers enterprises a live, consented hyper-panel of real-world consumer behavior — at scale.

What does that mean in practice?

AnthologyAI gives you a structured, high-resolution feature space to plug into your LLMs, recommender systems, and forecasting models — all via API or raw data feeds.

Whether you're building a personalization engine, a next-gen copilot, or a predictive market signal, AnthologyAI gives your AI the behavioral substrate it needs to reason like a human — because it finally sees one.

Too many enterprise AI systems are trained in a vacuum. They’re armed with pretrained weights and synthetic demos, but starved of the real-world behavioral data they need to reason, predict, or personalize.

It’s time to fix that. Stop guessing. Start observing.

If your AI doesn’t understand what your customers are actually doing in the real world — what they buy, watch, spend, and skip — then your models are not learning. They’re hallucinating.

The future belongs to AI that can reason. And reasoning requires behavior.